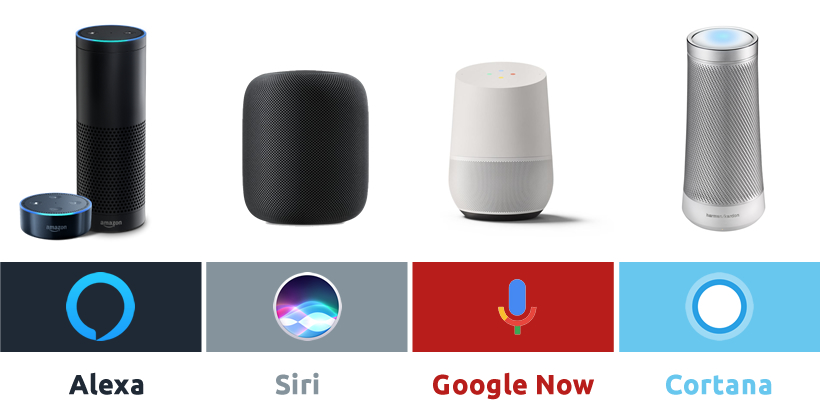

Voice User Interfaces (VUIs) allow for hands-free, eyes-free and efficient interactions that are, at their core, more human-like than any other form of user interface. As these technologies emerge, we find more synergies than ever in the relationship between humans and technology, and devices are rapidly becoming a more integrated part of our daily lives. This is easy to see: virtual assistants like Siri, Cortana and Alexa have brought VUI into the mainstream, with companies such as Google, Baidu and Sonos following their lead.

As the technology improves and new technologies are created, humans and technology will continue to forge an ever closer relationship, and an ever greater dependency.

Arthur C. Clarke, the acclaimed science fiction author of the legendary 2001: A Space Odyssey and creator of the character HAL 9000, an artificially intelligent computer capable of speech, speech recognition and natural language processing, once said:

“Any sufficiently advanced technology is indistinguishable from magic.”

Because a VUI requires nothing more than a voice command to carry out tasks or answer questions, the magic of tech is becoming mainstream: the user barely needs to lift a finger. Ta-da.

Fifty years have passed since the release of the film and the book of the same name, and today voice-based interfaces are a reality thanks to technological advances, access to data and sophisticated language-processing algorithms. On the user side of things, we are getting used to smart devices such as smart watches, smart speakers, smartphones and smart everything-else.

Anyhow, back to the tech side of things. Most voice-based interfaces are powered by cloud services and therefore need to be connected to the internet to work. While connected, the user wakes up the smart device to begin an interaction by saying a specific wake word or phrase, such as “Alexa” or “Hey Google”. The rest of the conversation is where the magic happens. The words spoken after the wake word are called an utterance, and from this point on, everything you say is passed to the cloud.

Speech recognition is then applied to the user’s voice command. Put simply, the algorithm converts the user’s speech to text. That would be a fairly straightforward process if it were not for the many different ways of pronouncing words and the variety of accents. To handle this, the speech recognition algorithm must be rather advanced: it uses linguistic and semantic analysis of the data it collects to train itself to interpret commands accurately.
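
To make the wake-word step concrete, here is a minimal Python sketch of the gating logic: nothing is forwarded until a wake phrase is heard, and only the words after it form the utterance. It assumes, purely for illustration, that the device already has the spoken words as text; in practice wake-word detection usually runs as a small acoustic model on the device itself, listening to raw audio. The wake phrases and the function name `extract_utterance` are hypothetical.

```python
from typing import Iterable, List, Optional

# Hypothetical wake phrases, stored as word tuples.
# Real devices detect these from audio, not from transcribed text.
WAKE_PHRASES = [("alexa",), ("hey", "google")]


def extract_utterance(words: Iterable[str]) -> Optional[List[str]]:
    """Return the words spoken after the first wake phrase, or None.

    Only the returned words would be sent on to the cloud; everything
    heard before the wake phrase is discarded locally.
    """
    # Normalize case and strip trailing punctuation such as "Google,".
    buffer = [w.lower().strip(",.?!") for w in words]
    for start in range(len(buffer)):
        for phrase in WAKE_PHRASES:
            end = start + len(phrase)
            if tuple(buffer[start:end]) == phrase:
                return buffer[end:]
    return None  # no wake phrase heard: nothing leaves the device
```

For example, `extract_utterance("so anyway Alexa how hot is it in Stockholm".split())` returns `['how', 'hot', 'is', 'it', 'in', 'stockholm']`, the utterance that would be forwarded for speech recognition and NLP.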

Once the user’s speech has been interpreted and transcribed into text, the machine needs to understand what is actually being said, that is, the intention behind it. This is where Natural Language Processing (NLP) steps in. In essence, NLP converts human language into something more structured and better suited to a computer.

The technology itself is not new: it relies on the same principles used to train chatbots, in which NLP matches a user’s message to a predefined intent. The challenge (generally considered the ‘training part’, or machine learning) is to match the wide variety of utterances to the right intent, since humans have many ways of asking for the same thing. People express themselves differently depending on their personality, their culture and even the generation they belong to. Your grandpa will most likely express himself differently from you, as language, like culture, is ever evolving.
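
As a rough illustration of matching many phrasings to one predefined intent, the sketch below scores an utterance against a few example utterances per intent using word overlap (Jaccard similarity). The intent names, examples and threshold are hypothetical, and word overlap is a deliberately simple stand-in: real assistants use trained statistical or neural models rather than this rule.

```python
import re
from typing import Dict, List, Set

# Hypothetical intents, each with a few example utterances ("training data").
TRAINING_EXAMPLES: Dict[str, List[str]] = {
    "GetWeather": [
        "how hot is it in stockholm",
        "what's the temperature outside",
        "is it going to rain today",
    ],
    "FindRestaurant": [
        "where can i grab something to eat",
        "find me a restaurant nearby",
        "i'm hungry",
    ],
}


def tokens(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Share of words the two sets have in common, from 0.0 to 1.0."""
    return len(a & b) / len(a | b) if a | b else 0.0


def match_intent(utterance: str, threshold: float = 0.2) -> str:
    """Return the intent whose closest example overlaps most with the utterance."""
    words = tokens(utterance)
    best_intent, best_score = "Fallback", 0.0
    for intent, examples in TRAINING_EXAMPLES.items():
        score = max(jaccard(words, tokens(e)) for e in examples)
        if score > best_score:
            best_intent, best_score = intent, score
    # Below the threshold, admit we did not understand rather than guess.
    return best_intent if best_score >= threshold else "Fallback"
```

Here `match_intent("How warm is it in Stockholm?")` returns `'GetWeather'` even though “warm” never appears in the examples, while `match_intent("play some jazz")` returns `'Fallback'`. Adding more example phrasings per intent is the toy equivalent of the training part.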

Let’s look at an example of how a service such as Siri really works. It’s a hot day in Stockholm. The woman above wonders how warm it is and asks Siri:

- 'How hot is it in Stockholm?'

There are several different ways of asking that question, but this is a fairly typical one.

NLP algorithms then parse what the user says to pick out slots: variables that complete an intent, such as ‘in Stockholm’. The name of the city is a variable, and it fills a slot in the user’s utterance. To take it one step further, the woman could use multiple slot values, for instance by adding a timeframe to the question above: 'What’s the temperature in Stockholm tonight?' The more advanced the NLP models, the more capable the conversational interface, because it can reliably handle richer utterances like this one.
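
Slot filling can be sketched in the same spirit. The Python example below pulls a `city` and a `time` slot out of a weather question using small hand-written vocabularies. The slot names, vocabularies and the `fill_slots` function are hypothetical, and production systems rely on trained entity recognizers instead of fixed word lists.

```python
import re
from typing import Dict, Optional

# Hypothetical slot vocabularies; real systems use trained entity recognizers.
KNOWN_CITIES = ("stockholm", "london", "new york")
TIME_WORDS = ("now", "today", "tonight", "tomorrow")


def fill_slots(utterance: str) -> Dict[str, Optional[str]]:
    """Extract 'city' and 'time' slot values from a weather question."""
    text = utterance.lower()
    slots: Dict[str, Optional[str]] = {"city": None, "time": None}
    for city in KNOWN_CITIES:
        # "in <city>": the preposition marks the location slot.
        if re.search(rf"\bin {re.escape(city)}\b", text):
            slots["city"] = city
            break
    for word in re.findall(r"[a-z']+", text):
        if word in TIME_WORDS:
            slots["time"] = word
            break
    return slots  # unfilled slots stay None
```

For example, `fill_slots("What's the temperature in Stockholm tonight?")` returns `{'city': 'stockholm', 'time': 'tonight'}`. Without “tonight”, the `time` slot stays `None`, and the service handling the intent can fall back to a default such as “right now”.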

A sentence becomes even more complex if the woman asks more than one question at once. That means more slot values and variables to consider, which makes the real intentions behind the sentence harder to interpret. For example:

- 'What’s the temperature in Stockholm tonight and where can I grab something to eat?'
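
One naive way to handle a compound request like this is to split it into separate requests before matching intents. The sketch below splits on “and” only when the next word looks like the start of a new question, so that “salt and pepper” stays intact. The word list and `split_questions` are hypothetical, and a rule like this is brittle compared with the multi-intent handling an advanced NLP model would provide.

```python
import re
from typing import List

# Hypothetical list of words that typically open a new question.
QUESTION_WORDS = ("what", "where", "when", "who", "how", "is", "can")


def split_questions(utterance: str) -> List[str]:
    """Naively split an utterance into separate requests at 'and <question word>'."""
    # The lookahead keeps the question word in the second part instead of consuming it.
    pattern = r"\s+and\s+(?=(?:" + "|".join(QUESTION_WORDS) + r")\b)"
    parts = re.split(pattern, utterance.strip().rstrip("?"), flags=re.IGNORECASE)
    return [p.strip() for p in parts if p.strip()]
```

Applied to the question above, it returns `['What’s the temperature in Stockholm tonight', 'where can I grab something to eat']`, and each part can then go through intent matching and slot filling on its own.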

Anyhow, let’s get back to how the computer interprets the data. The intent and its slot values are packaged into a data structure that is passed along to a service capable of handling that intent. This is where the voice interface connects to a suitable service. For example, when asking about the temperature, one obviously wants to receive that information from a reliable source, such as a weather service.
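
A minimal sketch of that hand-off, assuming a simple in-process registry: the NLP result is packaged as an `IntentRequest` and routed to whichever handler is registered for its intent. The class, its field names and `handle_weather` are hypothetical. The weather handler only formats a placeholder sentence where a real implementation would call a weather service, and actual voice platforms define their own request formats.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


@dataclass
class IntentRequest:
    """The structured result of NLP: an intent name plus its slot values."""
    intent: str
    slots: Dict[str, Optional[str]] = field(default_factory=dict)


def handle_weather(request: IntentRequest) -> str:
    # Hypothetical stand-in: a real handler would query a weather service here.
    city = request.slots.get("city") or "your area"
    time = request.slots.get("time") or "right now"
    return f"Here is the forecast for {city} {time}."


# Registry mapping each intent name to the service that can handle it.
HANDLERS: Dict[str, Callable[[IntentRequest], str]] = {
    "GetWeather": handle_weather,
}


def dispatch(request: IntentRequest) -> str:
    """Route the request to the handler registered for its intent."""
    handler = HANDLERS.get(request.intent)
    if handler is None:
        return "Sorry, I can't help with that yet."
    return handler(request)
```

Calling `dispatch(IntentRequest("GetWeather", {"city": "Stockholm", "time": "tonight"}))` returns `'Here is the forecast for Stockholm tonight.'`, while an intent with no registered handler gets the fallback reply. Keeping the registry separate from the NLP step means new services can be added without touching intent matching.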

However, the information retrieved is still in text form, and since this is a Voice User Interface, it needs to be converted into speech. The cloud services that power these voice interfaces typically use speech synthesis (text-to-speech), built from recordings of human speech, to read the results out. To make the result sound somewhat natural and conversational, these services use a markup language designed specifically for speech synthesis, such as the W3C Speech Synthesis Markup Language (SSML). Without it, the ‘voice’ would sound rather robotic and stiff. With it, the voice can mimic the way we actually speak, using emphasis, filler words, pauses and even accents that belong to a certain region. This allows for a more ‘human’ kind of conversation than most other interfaces can offer.

Long gone are the days of robotic automated customer care centers. With advances in machine learning and natural language processing, interactions with brands and devices through a VUI are quickly becoming more ‘human’ and less robotic, as technical progress is shaped by a culture of human centricity.
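
To show what such markup looks like, the sketch below wraps an answer in SSML using the standard `<speak>`, `<break>`, `<emphasis>` and `<prosody>` elements. The `to_ssml` helper and its parameters are hypothetical, and which elements and attribute values are supported varies between voice platforms, so the platform's own SSML reference is the authority.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape


def to_ssml(intro: str, value: str, outro: str, pause_ms: int = 300) -> str:
    """Build SSML: intro, a short pause, the key value emphasized, then a slower outro."""
    # escape() turns &, < and > in the answer text into XML entities.
    return (
        "<speak>"
        f"{escape(intro)}"
        f'<break time="{pause_ms}ms"/>'  # a deliberate pause before the key fact
        f'<emphasis level="moderate">{escape(value)}</emphasis> '
        f'<prosody rate="slow">{escape(outro)}</prosody>'
        "</speak>"
    )


ssml = to_ssml("Right now in Stockholm it is", "28 degrees", "so stay in the shade.")
# Parsing the result confirms the markup is well-formed XML (ParseError if not).
root = ET.fromstring(ssml)
```

Parsing the output with `ElementTree` is a cheap sanity check that escaping worked, since a stray `&` or `<` in the answer text would otherwise break the markup before it ever reaches the speech engine.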

Next up: how the people at BBH Stockholm experiment and innovate with voice technology.